How my grandparents interacted with Glass showed me that Google seems to be onto something.

As a lot of researchers were visiting for the UbiComp/ISWC conferences, I was able to borrow a Google Glass from one of the participants for a week. Thanks to the anonymous donor (to avoid speculation: it was none of the people mentioned below).

What follows are some unsorted thoughts ... Sorry for typos etc. It's more of a scratch pad so I don't forget the impressions I had.

##First Impressions##

The Glass device feels expensive and a little bit futuristic.

I’m impressed by the build quality and design. It also has a “Google” feel to it, e.g. “funny” jokes in the manual (“don’t use glass for scuba diving …”).

The display works extremely well, and although Glass is made for micro-interactions (quickly checking an email/SMS, Google Now updates, taking pictures), I could watch videos and read longer emails/documents on it without trouble or any sight problems (I experienced no headaches as happened with other setups, see below). It would be perfect for boring meetings if other people could not see what you are doing …

I assume the Glass design team made the conscious decision to let other people know when you are interacting with Glass. People can see if the screen is on and even recognize what’s on the screen if they get close enough.

##Grandparents and Mother with Google Glass##

I have a basic test for technology or research topics in general.

I try to explain it to my grandparents and mother to see if they understand it and find it interesting.

Various head-mounted displays, tablets and activity recognition algorithms were tested this way …

For example, they were not big fans of tablets/slates or smart phones until they played with an iPhone and iPad.

Surprisingly, my grandparents did not have the reservations they have towards other computing devices.

Usually, they have the feeling that they could destroy something and are extra careful/hesitant.

Yet Google Glass looks like glasses, so it was easy for them to set up and use.

The system worked quite well (although so far only English is supported); the speech recognition and touch interface were simple to learn after a quick 5 min. introduction. I was surprised myself …

Sadly, the speech interface does a poor job with German terms, e.g. googling for “Apfelkuchen Rezept” (apple cake recipe) did not work as intended.

Yet, both of them saw potential in Glass and could imagine wearing it during the day.

I was most astounded by the application cases they came up with.

My grandfather took a picture of the pills he needs to take after each meal.

He told me he always wonders whether he has taken them or not and sometimes checks 2-3 times after a meal to be certain. By taking a picture and using the touch panel to browse recent pictures (with timestamps), he can easily figure out when he last took them.

My grandmother would love to use Glass for gardening. Sometimes she gets a phone call while working in the garden, and then she has to change shoes, take off her gloves etc. and hurry to the cordless phone. Additionally, she likes to get advice from my mum or friends about where to put which flower seeds etc., so she asked me if it’s possible to show the video stream from Glass to other people over the Internet :)

We also did a practical test: my grandmother and mother wore Glass while shopping in Karlsruhe.

Both of them wear glasses, so not too many people noticed or looked at them. I think people assumed it was some kind of medical device or vision aid.

My mother used the timeline in Glass to track when she took the pictures, tracing back to when she saw something nice to figure out which store the item was in. She tried taking pictures of price tags. Unfortunately, the resolution of the screen is not high enough to read the price, yet this could easily be fixed with a zoom function for pictures.

Interestingly, she also carries a smart phone, yet it never occurred to her to use it for shopping the way she used Glass.

##Public Reactions##

As mentioned, my mum and grandmother wore Glass nearly unnoticed.

This is quite different from my experience … When I wore it in public, most people in Karlsruhe and Mannheim (the two cities I tried) eyed me with wary faces (you could see the questions in their eyes: “What is he wearing?? Some medical device?? NERD!!”). This was particularly bad when I spoke with a clerk or another person directly, as they kept staring at Glass instead of looking into my eyes ;)

Social reception was better when I was with my family. Strangely, people mostly asked my grandmother what I was wearing. Very few approached me directly.

Reactions fell into 3 broad categories:

- “WOW Cool … Glass! How is it? Can I try??” – Note: Before it’s released to the public, I strongly recommend not wearing it on any campus with a larger IT faculty. I did not account for that and it was quite difficult to get across the Karlsruhe University campus :)

- “Stop violating my privacy!” – During the week, only one person approached me directly about privacy concerns. The person was quite angry at first. I believe it’s mostly due to misinformation (something Google needs to take seriously), as he believed Glass would automatically stream everything to Google and listen to all conversations etc. After I showed him the functionality of the device, how to use it and how to see if somebody is using it, he was calmer and actually liked it (he could see the potential of a wearable display).

- “What’s wrong with this guy?” – Especially when I was traveling alone, people stared at me. I asked 1 or 2 of the most obnoxious people staring at me about it, and they answered that they thought I was wearing a medical device and wondered “what’s wrong with me”, as I looked otherwise “normal”.

##Some Issues##

The 3 biggest issues I had with it:

- Weight and placement - You need to get used to its weight. As I don’t wear prescription glasses, it feels strange to me to wear something on my nose. It’s definitely heavier than glasses. After a couple of hours it is ok. Also, it’s always in your peripheral vision, which takes getting used to.

- Battery life - Ok, I played with it a lot, given I could use Glass only for a week. Towards the end (when I played with it less) I could barely get a day of usage. I expect that’s something they can easily fix. Psst… you can also plug in a portable USB battery to charge during usage :)

- Social acceptance - This is the hardest one to crack. Having used Glass, I don’t understand most of the privacy fears people raise. It’s very obvious if a person is using the device/taking a picture etc. If I want to take covert pictures/videos of people, I believe it’s easier to do with today’s smart phones or spy cameras (available on Amazon for example) …

##Some more Context##

When I unboxed Glass, I remembered how Paul, my PhD advisor, and Thad (Glass project manager) chatted about how in the future everybody would wear some kind of head-mounted display and a computing device always connected to the Internet, helping us with everyday tasks - augmentations to our brain.

In the past, Paul was not a huge enthusiast of wearable displays and I agreed with him. I attempted to use the MicroOptical (the display used by Thad) several times and always had terrible headaches afterwards … Just not for me.

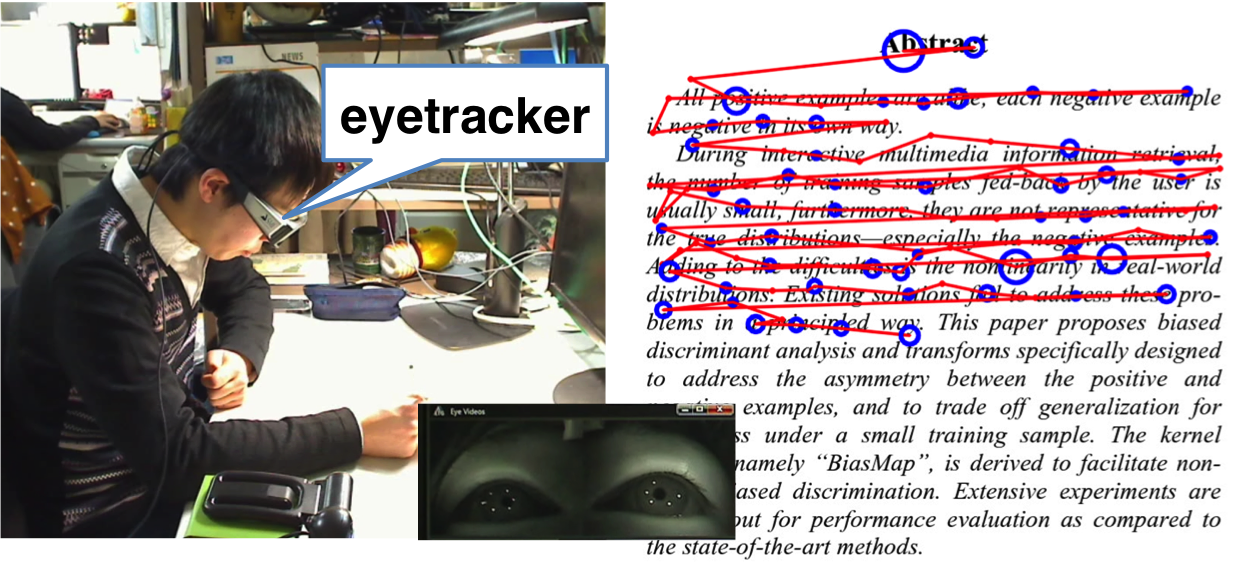

Between about 2004 and 2010, during my PhD, I played with various wearable setups for everyday use, each only for a week or a couple of days. If you work on wearable computing, you have to at least try. As seen in the picture above, the only setup that worked for me was a prototype HMD from Zeiss with the Qbic, an awesome belt-integrated Linux PC by ETH (black belt buckle in the picture), and a Twiddler 2. Yet I stopped using it, as the glasses were quite heavy, maintaining/adjusting the software was a hassle (compared to the advantages) and, I have to admit, because of social pressure: imagine living as a cyborg in a small Bavarian town, mostly populated by law and business students … I found my small, black, analog notebook handier and less intimidating to other people. Today, I’m an avid iPhone user (Things, Clear, Habit List, Textastic, Prompt and Lendromat …).

##To sum up##

All in all, I was quite sceptical at first; the design reminded me too much of the MicroOptical and the headaches I got using it. Completely unfounded! Even given the social acceptance issue, I cannot wait to get Glass for a longer test. However, I really need a good note-taking app; running Vim on Glass would already be a selling point for me, replacing my black notebook (and maybe smart phone?). I dusted off my Twiddler 2 (it took a long time to find it in the cellar) with its hacked Bluetooth connection, started practicing again and hope I can try it soon with Vim for Glass :D This is definitely not an application case for the mass market … Still, my grandparents told me that they believe there is a broader demand for such a device, also from “normal” people (they actually want to use it!). So let’s see.

Plus, the researcher in me cannot wait to get easily accessible motion sensors onto the heads of a lot of people. Combined with the sensors in your pocket, it’s activity recognition heaven!

Let’s discuss on Hacker News if you want.