A research concept from 2016 might be in the spotlight in the coming years: Eyewear Computing, as described and discussed in the Dagstuhl Seminar “Eyewear Computing – Augmenting the Human with Head-mounted Wearable Assistants”.

With the advent of the Apple Vision Pro, it seems timely to reflect on some of our earlier research directions. Eyewear Computing could go from an academic niche (e.g., the Eyewear Computing Workshops at UbiComp) to the mainstream in the coming years, if Apple succeeds in establishing its platform. Yet there are also tremendous challenges. In the long term, smart eyewear needs to become more like regular glasses.

Regular, analog glasses are already a great cognitive augment. When we put them on, we see more clearly and, after a while, forget that we are even wearing them. Yet, 78 years after the invention of what is considered the first digital, programmable computer (the ENIAC), our digital tools are still lagging behind. PCs, smartphones, and smartwatches distract us; they are not the “Invisible Computing” we were promised.

To enable digital augments, tools that seamlessly help us in everyday tasks, the most critical limitations are no longer CPU speed, RAM, or hard-drive space. The most critical are our cognitive limitations: our attention span, memory, and other cognitive properties. To understand these better, activity recognition for the mind is essential, moving toward a cognitive “Quantified Self”, or in other words, Cognitive Activity Recognition.

We are in the middle of an Artificial Intelligence (AI) revolution, and it removes one of the largest barriers for cognitive activity recognition: the reliable and correct classification of cognitive states. Given sufficiently large datasets, the first cognitive foundation models will emerge, similar to the foundation models we see today for language prediction (OpenAI’s ChatGPT, Meta’s LLaMA) and image generation (DALL·E 2, Midjourney, Stable Diffusion).

Yet the question remains: what would be a suitable concept and platform, first, to gather all this data and, second, to provide meaningful interactions that take cognitive states into account? In terms of concept, Eyewear Computing seems to fit perfectly. As most of our senses are located on the head, smart glasses could be the perfect cognitive assistants, simultaneously sensing cognitive states and providing cognition-aware interactions.

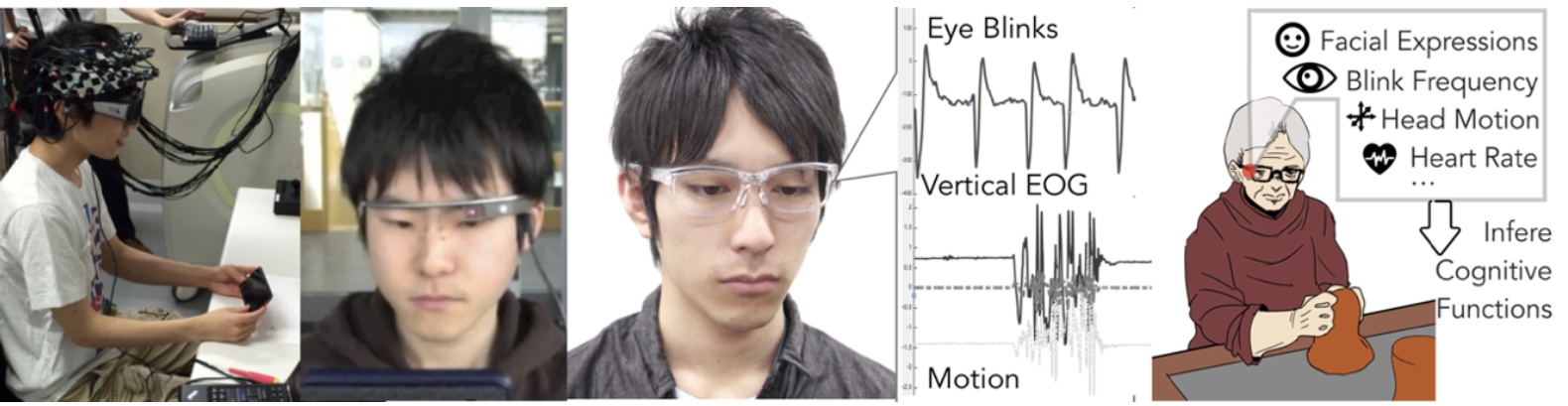

In terms of platforms, Google Glass was a very interesting first exploration of the Eyewear Computing space. With a simple blink detection, we could already obtain relevant information about cognitive states. Blinking not only keeps the eye lubricated; it is also related to cognitive tasks and might help us disengage our attention.
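To make “simple blink detection” concrete, here is a minimal sketch, not the Glass implementation. It assumes a one-dimensional sensor signal (for example, from an eye-facing proximity sensor or a vertical EOG channel) in which a blink shows up as a short upward spike. The function names, the threshold, the sampling rate, and the refractory period are all hypothetical parameters chosen for illustration. The detector counts upward threshold crossings and ignores crossings that follow a previous blink too closely, then converts the count into a blink rate, one of the simplest features one might relate to cognitive state.

```python
from typing import List, Sequence


def detect_blinks(signal: Sequence[float], fs: float, threshold: float,
                  refractory_s: float = 0.2) -> List[int]:
    """Return sample indices of detected blink onsets (hypothetical detector).

    A blink onset is an upward crossing of `threshold`. Crossings within
    `refractory_s` seconds of the previous onset are ignored so that one
    noisy blink is not counted twice.
    """
    refractory = int(refractory_s * fs)   # refractory period in samples
    blinks: List[int] = []
    last = -refractory - 1                # lets the very first crossing count
    for i in range(1, len(signal)):
        crossed = signal[i - 1] < threshold <= signal[i]
        if crossed and i - last > refractory:
            blinks.append(i)
            last = i
    return blinks


def blink_rate_per_minute(blinks: Sequence[int], fs: float, n_samples: int) -> float:
    """Blinks per minute over a recording of `n_samples` samples at `fs` Hz."""
    duration_min = n_samples / fs / 60.0
    return len(blinks) / duration_min if duration_min > 0 else 0.0


if __name__ == "__main__":
    fs = 10.0                  # assumed sampling rate: 10 Hz
    sig = [0.0] * 600          # 60 seconds of synthetic baseline
    for start in (100, 300, 500):
        for k in range(3):     # three short synthetic "blink" spikes
            sig[start + k] = 1.0
    onsets = detect_blinks(sig, fs, threshold=0.5)
    print(onsets)                                         # [100, 300, 500]
    print(blink_rate_per_minute(onsets, fs, len(sig)))    # 3.0
```

A fixed threshold keeps the sketch short; a real detector would likely need per-user calibration and drift compensation, since baseline signal levels vary between wearers and over time.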

The next platform we explored in the Eyewear Computing space was J!NS MEME. MEME is fascinating because, unlike Google Glass, it is not a full-fledged computer. MEME is a lightweight pair of glasses (ca. 35 grams) and a simple sensing device connected to a smartphone. It contains inertial motion sensors (accelerometer and gyroscope). Additionally, 3 electrodes are embedded in the nose bridge. With these three electrodes, one can recognize eye movements using electrooculography (EOG). These lightweight glasses enable a completely new sensing and interaction space. They can be used to track alertness throughout the day. We can even use the electrodes to detect subtle nose-touching gestures.
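As a rough illustration of how three electrodes can yield eye-movement information, the sketch below derives a horizontal and a vertical EOG channel and flags fast horizontal changes as saccade candidates. It is a hypothetical sketch, not the J!NS MEME SDK or its actual signal processing: it assumes the left and right nose-pad electrodes are measured against the bridge electrode as reference, and it uses the common textbook-style derivation (horizontal ≈ left − right, vertical ≈ mean of left and right). The velocity threshold and sampling rate are made up, and the sign-to-direction mapping depends on electrode placement, so events are labeled only “positive” or “negative”.

```python
from typing import List, Sequence, Tuple


def derive_eog(left: Sequence[float],
               right: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Hypothetical channel derivation from two electrodes measured against
    a shared bridge reference: horizontal = L - R, vertical = (L + R) / 2."""
    heog = [l - r for l, r in zip(left, right)]
    veog = [(l + r) / 2.0 for l, r in zip(left, right)]
    return heog, veog


def detect_saccades(heog: Sequence[float], fs: float,
                    vel_threshold: float) -> List[Tuple[int, str]]:
    """Flag saccade candidates where the horizontal EOG changes faster than
    `vel_threshold` (signal units per second).

    Consecutive above-threshold samples are merged into one event, reported
    at its first sample. The label is the sign of the velocity; which sign
    means "left" or "right" depends on electrode placement (assumption).
    """
    events: List[Tuple[int, str]] = []
    in_event = False
    for i in range(1, len(heog)):
        vel = (heog[i] - heog[i - 1]) * fs   # finite-difference velocity
        if abs(vel) >= vel_threshold:
            if not in_event:
                events.append((i, "positive" if vel > 0 else "negative"))
                in_event = True
        else:
            in_event = False
    return events


if __name__ == "__main__":
    fs = 100.0                                  # assumed sampling rate: 100 Hz
    left = [0.0] * 40 + [100.0] * 40 + [0.0] * 20
    right = [0.0] * 100
    heog, veog = derive_eog(left, right)
    print(detect_saccades(heog, fs, vel_threshold=5000.0))
    # [(40, 'positive'), (80, 'negative')]
```

A velocity threshold is used here only because it is the simplest saccade criterion to show; in practice, EOG signals drift and are noisy, so filtering and adaptive thresholds would likely be needed before features like these could be related to alertness.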

Yet each of these platforms had its limitations. Google Glass did not provide any sophisticated sensors for cognitive activity recognition, and its feedback was limited to a rather small screen and bone-conduction audio on one side only. J!NS MEME has no means of outputting information; interactions need to go through a secondary device (a smartphone).

For the Apple Vision Pro, we still know very little about the specifications. Yet, according to early feedback, its video see-through (passthrough) display seems to be amazing, with very little lag. In terms of sensing, it definitely provides camera-based eye tracking. The cameras are probably also used to enable facial expression tracking. I’m curious about its hardware setup; maybe the Vision Pro also contains some infrared distance sensors. It is possible to detect the 8 universal facial expressions with relatively simple smart eyewear.

If the Apple Vision Pro succeeds, it could usher in a renaissance for Eyewear Computing and a revolution in understanding our cognitive tasks and performance.